About Me

I'm an independent researcher focused on AI safety, working to develop a mechanistic understanding of how large language models process and reason about information. My research covers machine unlearning, modularity, and planning in transformers, all aimed at understanding how these systems work at a larger scale.

With a background in theoretical physics and olympiad mathematics, I combine research with practical engineering. I've built everything from quantum spin simulations to full-stack web apps and mobile applications. These days I mostly write Python for ML research, but I'm comfortable working across the stack.

Main Projects

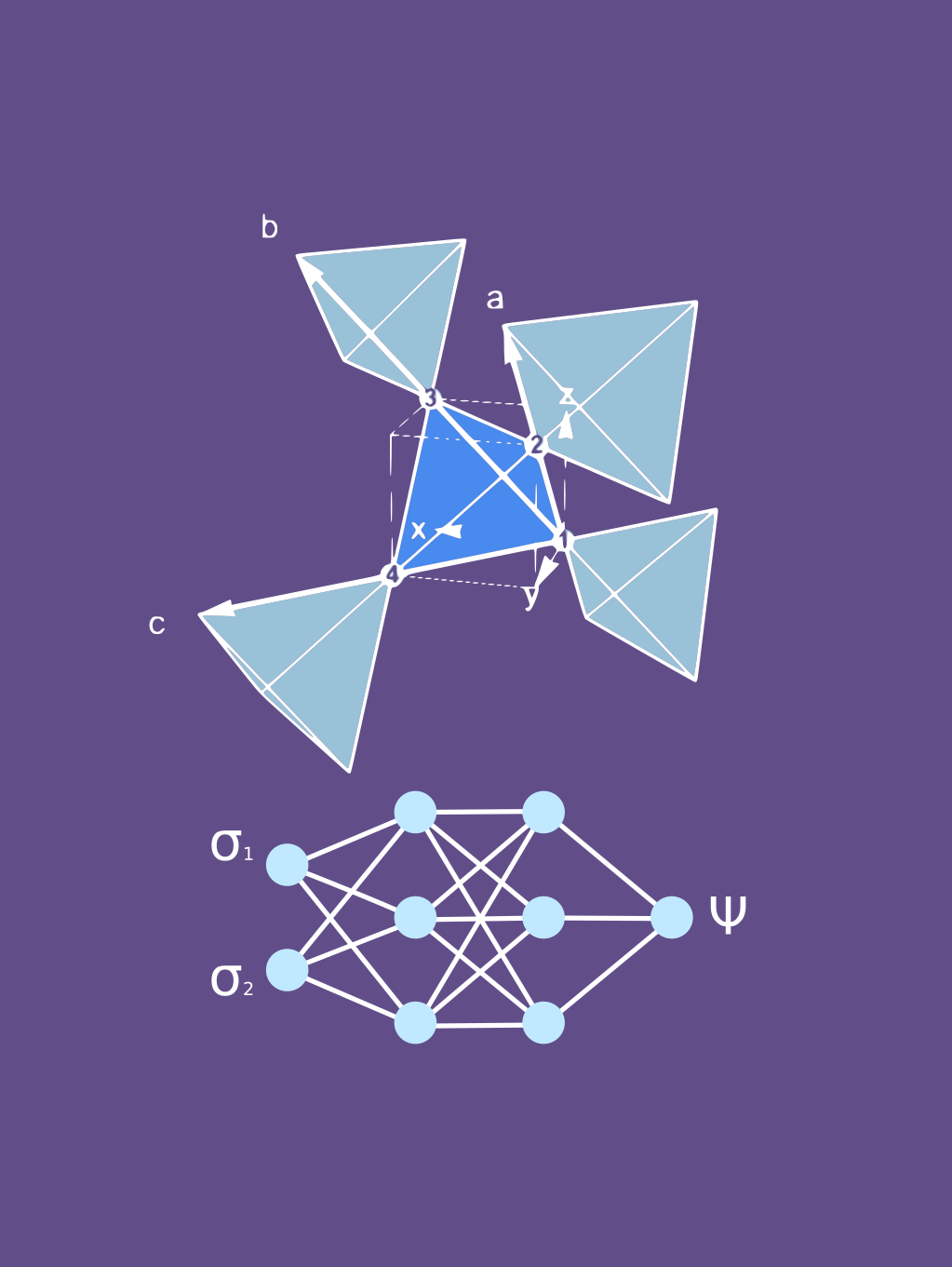

ParaScopes: Using Residual Stream Decoders to find Planning in Language Models

Helped decode how LLMs "plan" information by analyzing single-token residual stream activations. Used two methods: 1) using the original model to decode the residual stream, and 2) mapping the residual stream into a text auto-encoder space.

View Project

Extracting Paragraphs from LLM Token Activations

Investigated how language models encode and plan paragraph-level information by analyzing single-token activations. Developed methods to extract and transfer contextual information between different prompts using activation patching techniques. Demonstrated that models maintain strong contextual awareness during text generation.

View Project

Dissecting Language Models: Machine Unlearning via Selective Pruning

Introduced a novel machine unlearning method for LLMs that uses selective pruning to remove specific capabilities while retaining others. The method identifies and removes neurons based on their relative importance to targeted capabilities vs overall network performance. Demonstrated state-of-the-art results in selective capability removal while maintaining model performance on other tasks.
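The idea of scoring neurons by relative importance can be illustrated with a minimal sketch. This is not the project's actual implementation: the importance measure (mean absolute activation), the ratio score, and the names `mean_abs`, `neurons_to_prune`, and `prune_frac` are all assumptions chosen for illustration.

```python
def mean_abs(acts):
    """Mean absolute activation per neuron.

    acts is a list of samples, each a list of per-neuron activations.
    """
    n_samples = len(acts)
    n_neurons = len(acts[0])
    return [sum(abs(row[j]) for row in acts) / n_samples for j in range(n_neurons)]


def neurons_to_prune(forget_acts, retain_acts, prune_frac=0.02, eps=1e-8):
    """Pick neurons that matter much more for the 'forget' data than the 'retain' data.

    Assumption: importance is mean absolute activation, and the score is the
    ratio of forget importance to retain importance. Other choices are possible.
    """
    forget_imp = mean_abs(forget_acts)
    retain_imp = mean_abs(retain_acts)
    scores = [f / (r + eps) for f, r in zip(forget_imp, retain_imp)]
    n_prune = max(1, round(prune_frac * len(scores)))
    # Rank neuron indices from highest to lowest score and keep the top slice.
    ranked = sorted(range(len(scores)), key=lambda j: scores[j], reverse=True)
    return sorted(ranked[:n_prune])


# Toy data: neuron 3 is far more active on the capability to be removed.
retain = [[1.0, 1.0, 1.0, 1.0, 1.0] for _ in range(4)]
forget = [[1.0, 1.0, 1.0, 10.0, 1.0] for _ in range(4)]
selected = neurons_to_prune(forget, retain, prune_frac=0.2)
```

In a real model the selected neurons would then be removed, for example by zeroing their outgoing weights, and the score would be recomputed if pruning is applied iteratively.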

View Project

Modularity in Transformers: Investigating Neuron Separability & Specialization

Researched modularity and task specialization within transformer architectures, focusing on both vision and language models. Combined selective pruning and clustering techniques to analyze how different neurons specialize in various tasks. Found evidence of task-specific neuron clusters with varying degrees of overlap between related tasks.

View Project

Investigating Neuron Ablation in Attention Heads

Developed a novel 'peak ablation' method for analyzing attention mechanisms in transformer models. Compared different neuron ablation techniques and their effects on model performance. Research provided insights into how attention neurons represent information and how different ablation methods affect model behavior.

View Project

Machine Learning for Many-Spin Quantum Systems

Worked on finding the ground state of quantum spin systems in different lattice configurations using a range of algorithms, looking in particular at whether neural network models can give better results.

View Project

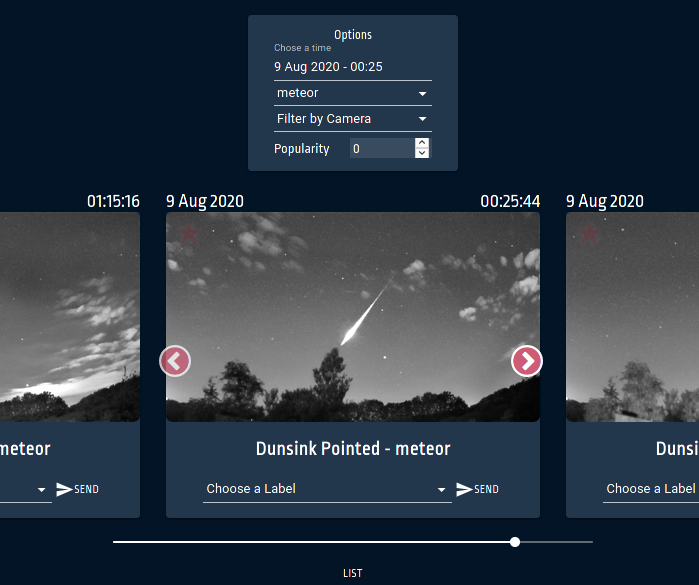

Meteor Website

Created an automated website for browsing a database of meteor images and collecting user input.

View Project

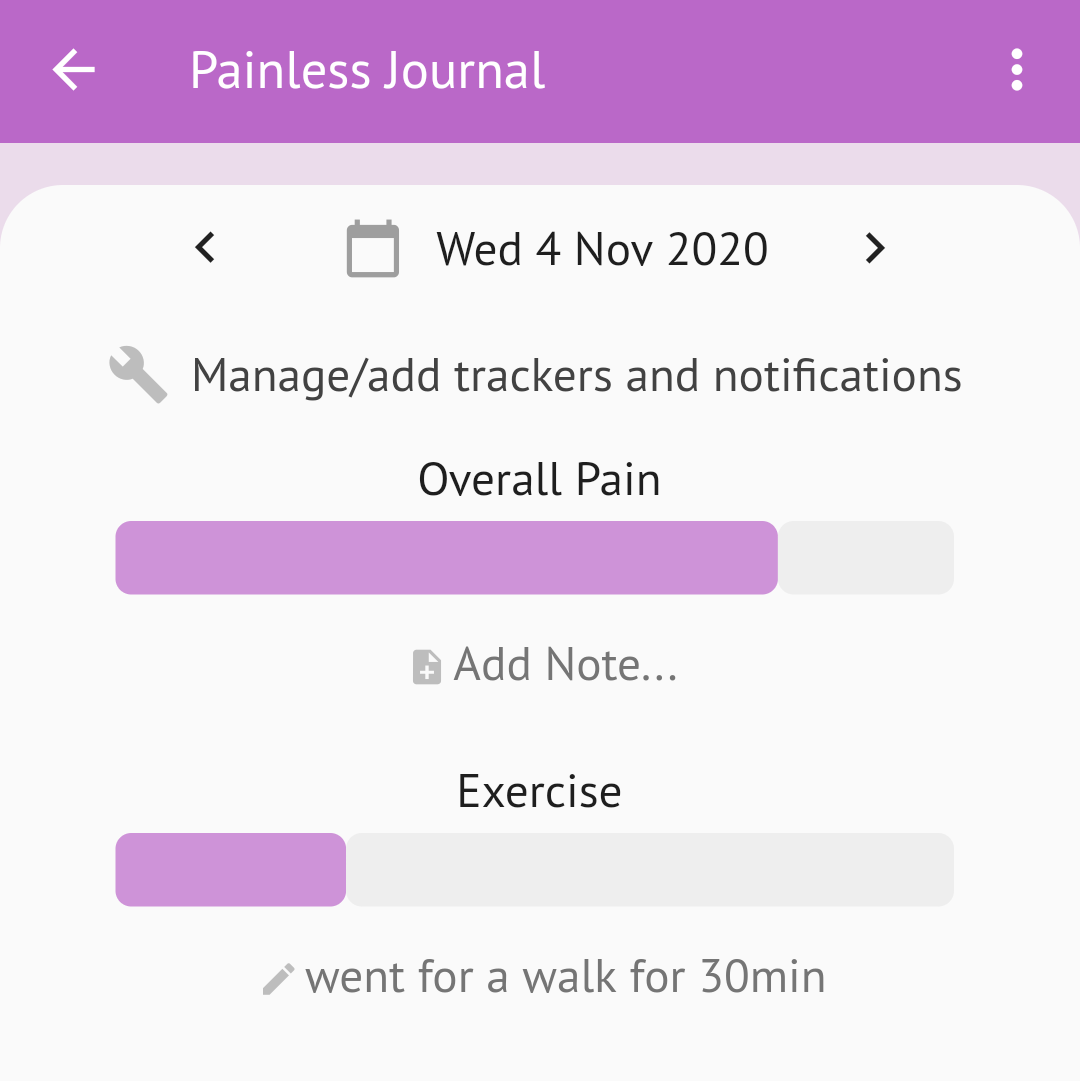

Painless Journal App

Worked in a team to develop a Flutter app for tracking and managing chronic pain symptoms.

View Project

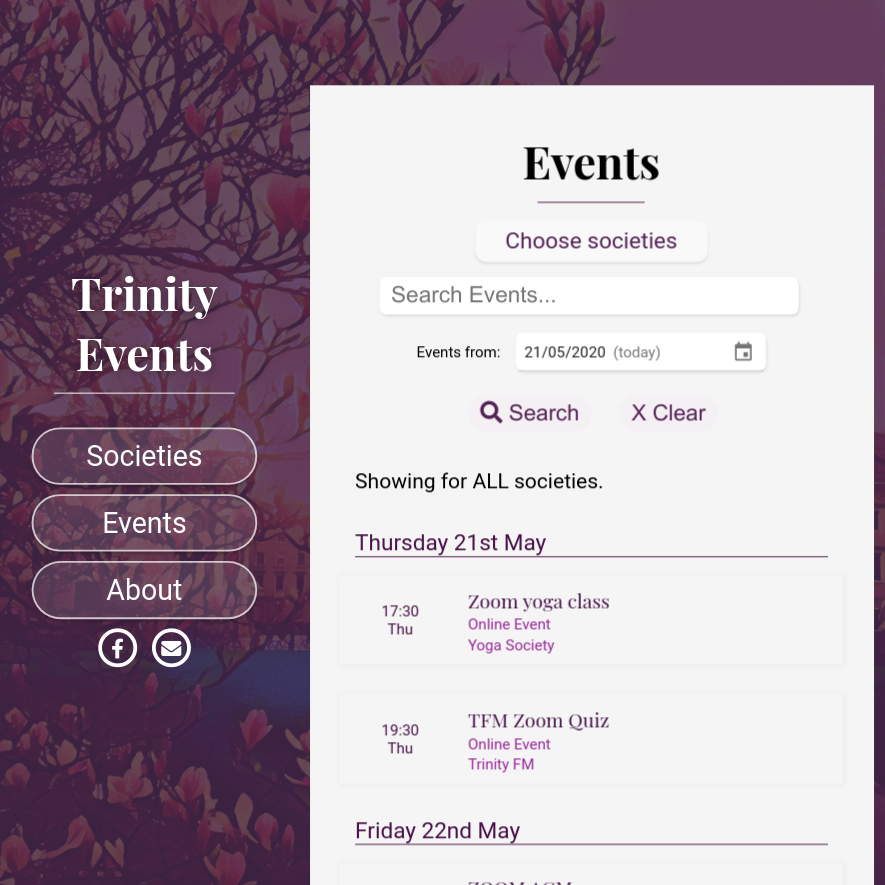

Society Events Website

A website that scrapes and saves data from Facebook to connect students to events at Trinity College.

View Project

SGX Twilio

Created a bridge from Twilio phone numbers to XMPP, following the Soprani.ca specification, that allows Twilio phone numbers to be used for normal texting.

View Project

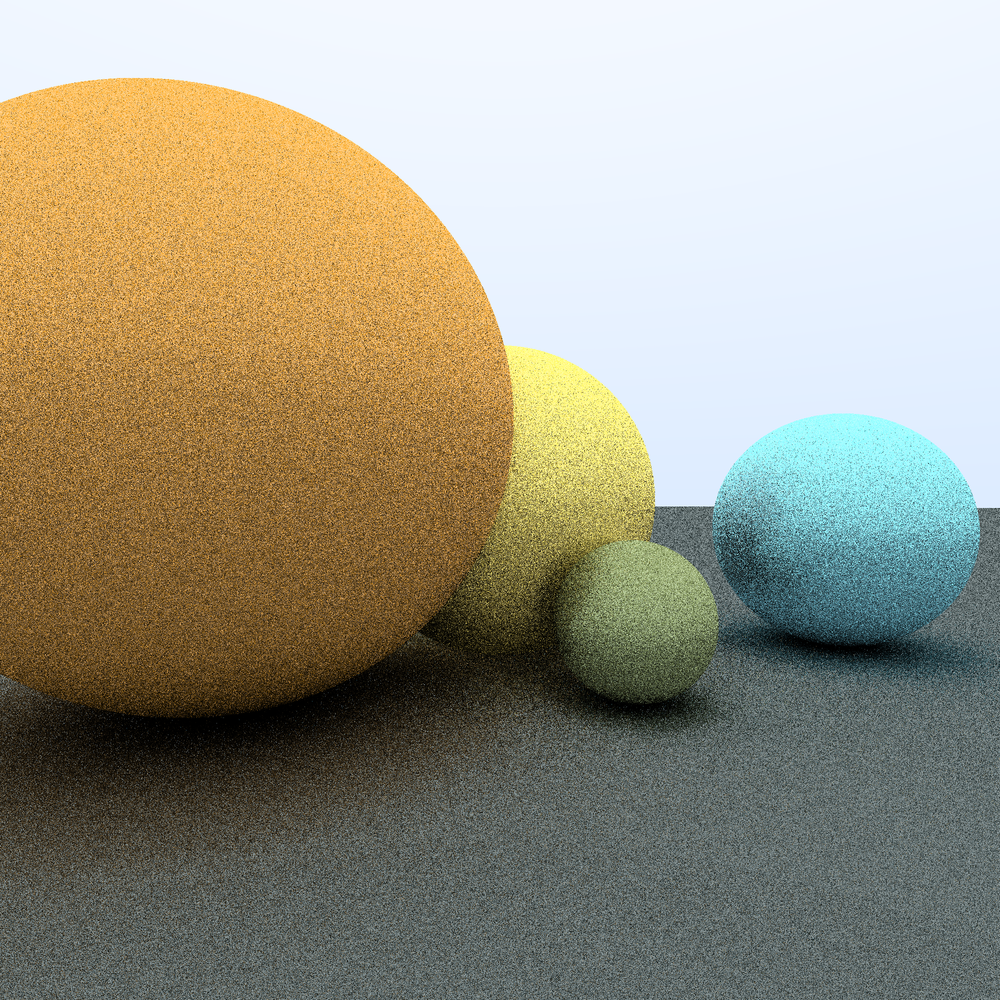

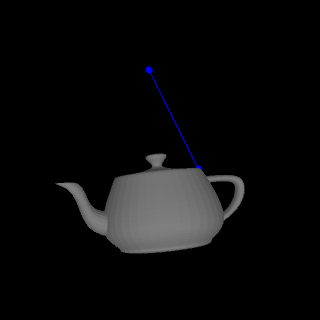

Ray Tracing Physics Project

Studied the physics of ray-tracing simulation in a team and built a C++ program to explore its implementation.

View Project

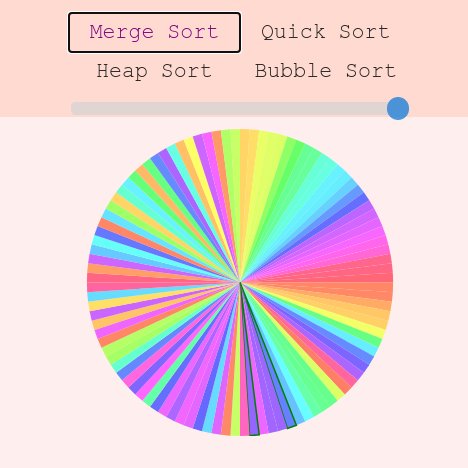

Sorting Algorithm Visualizer

A tool for visualising common sorting algorithms: Merge, Quick, Heap & Bubble Sort.

View Project

Rigid Body Simulation

Created a 3D interactive rigid-body simulator using advanced integration techniques, such as Green's Theorem.
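One common use of Green's theorem in rigid-body simulation is turning an area integral into a sum over a shape's boundary, for example to get mass properties. The sketch below is a 2D illustration only, and whether the simulator uses the theorem this way is an assumption; the function name `polygon_area_centroid` is hypothetical.

```python
def polygon_area_centroid(vertices):
    """Area and centroid of a simple polygon from its boundary.

    vertices is a list of (x, y) tuples in counter-clockwise order.
    Green's theorem reduces the area integrals to sums over the edges.
    """
    twice_area = 0.0
    cx_sum = 0.0
    cy_sum = 0.0
    n = len(vertices)
    for i in range(n):
        x0, y0 = vertices[i]
        x1, y1 = vertices[(i + 1) % n]  # wrap around to close the polygon
        cross = x0 * y1 - x1 * y0
        twice_area += cross
        cx_sum += (x0 + x1) * cross
        cy_sum += (y0 + y1) * cross
    area = twice_area / 2.0
    centroid = (cx_sum / (6.0 * area), cy_sum / (6.0 * area))
    return area, centroid


# A 2 x 2 square with one corner at the origin.
area, centroid = polygon_area_centroid([(0, 0), (2, 0), (2, 2), (0, 2)])
```

For uniform density, the mass is the area times the density and the centre of mass is the centroid. The 3D analogue uses the divergence theorem over the surface of a mesh.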

View Project